The Hydrus Project

It has become traditional for the Brazilian Symposium on Computing Systems Engineering to be held together with the Intel Embedded Systems Contest. For the contest’s 2016 edition, I teamed up with a couple of friends, Guilherme Pangratz and Êmili Bohrer, and my mentor, Giovani Gracioli, to come up with an exciting entry. The project was approved back in April and development is going full steam ahead, so I thought it was time to blog about it.

The proposal

The state of basic sanitation in Brazil is dire in many places. While investigating ways to improve the detection of irregular sewage dumping and other changes in water quality in bays and reservoirs, we decided to create an aquatic drone that navigates and measures water quality autonomously. We named the drone and the project after Hydrus, the “male water snake” constellation of the southern sky.

Our drone is to be based on the Intel Galileo Gen 2 board, and should be able to leave its base station, navigate autonomously to preset waypoints, acquire some data, and return home.
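To make that mission sequence concrete, here is a minimal sketch in C of how it could be modelled as a small state machine. This is not the Hydrus firmware: every state, event, and function name below is hypothetical, and I am assuming that one sample is taken at each waypoint before moving on.

```c
#include <stddef.h>

/* Hypothetical mission states; names are illustrative only. */
typedef enum {
    MISSION_DOCKED,     /* waiting at the base station */
    MISSION_TRANSIT,    /* heading to the next waypoint */
    MISSION_SAMPLING,   /* acquiring data at a waypoint */
    MISSION_RETURNING,  /* heading back to the base station */
    MISSION_DONE        /* back home, mission complete */
} mission_state_t;

/* Hypothetical events fed into the state machine. */
typedef enum {
    EVT_START,        /* operator starts the mission */
    EVT_ARRIVED,      /* target position (waypoint or home) reached */
    EVT_SAMPLE_DONE   /* data acquisition at the waypoint finished */
} mission_event_t;

typedef struct {
    mission_state_t state;
    size_t next_waypoint;   /* index of the waypoint being approached */
    size_t waypoint_count;  /* total number of waypoints in the route */
} mission_t;

void mission_init(mission_t *m, size_t waypoint_count)
{
    m->state = MISSION_DOCKED;
    m->next_waypoint = 0;
    m->waypoint_count = waypoint_count;
}

/* Advance the mission by one event and return the resulting state.
   Events that make no sense in the current state are ignored. */
mission_state_t mission_step(mission_t *m, mission_event_t evt)
{
    switch (m->state) {
    case MISSION_DOCKED:
        if (evt == EVT_START)
            m->state = (m->waypoint_count > 0) ? MISSION_TRANSIT
                                               : MISSION_DONE;
        break;
    case MISSION_TRANSIT:
        if (evt == EVT_ARRIVED)
            m->state = MISSION_SAMPLING;
        break;
    case MISSION_SAMPLING:
        if (evt == EVT_SAMPLE_DONE) {
            m->next_waypoint++;
            m->state = (m->next_waypoint < m->waypoint_count)
                           ? MISSION_TRANSIT
                           : MISSION_RETURNING;
        }
        break;
    case MISSION_RETURNING:
        if (evt == EVT_ARRIVED)
            m->state = MISSION_DONE;
        break;
    case MISSION_DONE:
        break;
    }
    return m->state;
}
```

With a two-waypoint route, feeding the events start, arrived, sample done, arrived, sample done, arrived walks the drone from docked through both waypoints and back home. A real controller would also need failure states (low battery, lost GPS fix), which this sketch leaves out.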

Current status

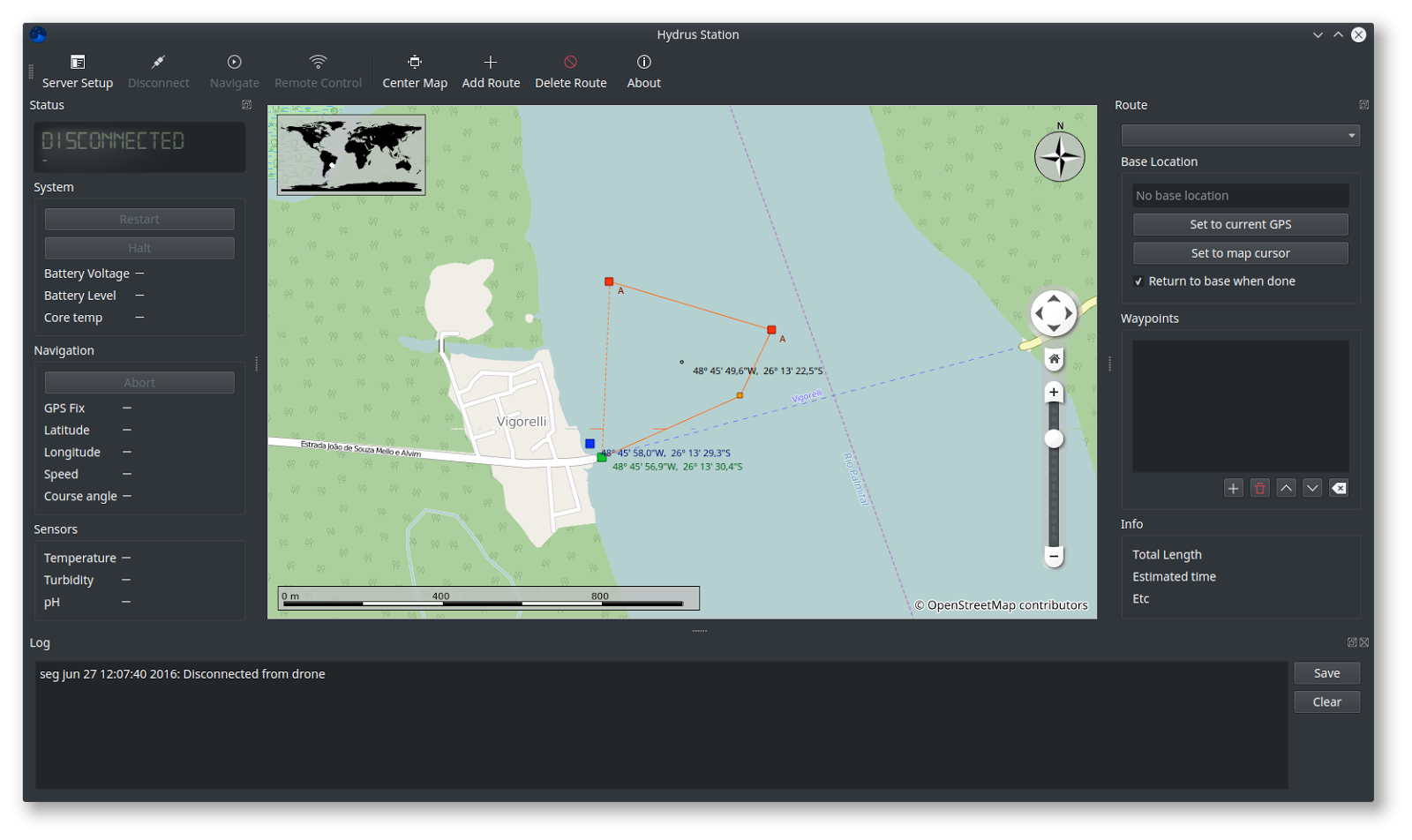

Base station application prototype

Hardware: We have created power, GPS and frontend boards for the boat. The frontend has indicator LEDs and a shutdown button, as well as an AMOLED screen for displaying essential system information. The power board contains a very basic power backbone for distributing power to the electronic motor controllers, a battery level sensing circuit, and a simple +5V output for powering the sensors.

Software: The base for our firmware is a cyclic executive task scheduler with a scheduling table, and a global blackboard data structure. There are tasks for system management, navigation, sensing, and communication. Parsing of GPS navigational data is done, as well as motor control and vectoring. Communication with the base station is partially implemented.
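For readers unfamiliar with the pattern, here is a minimal sketch in C of a cyclic executive driven by a scheduling table, with tasks communicating through a global blackboard. It is only an illustration of the general technique: the task names, the blackboard fields, the four-frame layout, and the task rates are all assumptions, not our actual firmware.

```c
#include <stddef.h>
#include <stdint.h>

/* Global blackboard: shared state that every task reads and writes.
   The fields are hypothetical counters, used here only to show activity. */
typedef struct {
    uint32_t sensing_runs;
    uint32_t navigation_runs;
    uint32_t communication_runs;
} blackboard_t;

blackboard_t blackboard;  /* zero-initialized at program start */

typedef void (*task_fn)(blackboard_t *bb);

/* Placeholder tasks: real ones would read sensors, steer, send telemetry. */
static void sensing_task(blackboard_t *bb)       { bb->sensing_runs++; }
static void navigation_task(blackboard_t *bb)    { bb->navigation_runs++; }
static void communication_task(blackboard_t *bb) { bb->communication_runs++; }

#define MINOR_FRAMES        4
#define MAX_TASKS_PER_FRAME 3

/* Scheduling table: one row per minor frame, listing the tasks to run
   in order. Unused slots are implicitly NULL and terminate the row.
   Assumed rates: sensing every frame, navigation every second frame,
   communication once per major cycle. */
static const task_fn schedule_table[MINOR_FRAMES][MAX_TASKS_PER_FRAME + 1] = {
    { sensing_task, navigation_task, communication_task },
    { sensing_task },
    { sensing_task, navigation_task },
    { sensing_task },
};

/* Run all tasks listed for one minor frame. */
void run_minor_frame(size_t frame, blackboard_t *bb)
{
    const task_fn *tasks = schedule_table[frame % MINOR_FRAMES];
    for (size_t i = 0; tasks[i] != NULL; i++)
        tasks[i](bb);
}

/* Run one major cycle, i.e. every minor frame once, in order. */
void run_major_cycle(blackboard_t *bb)
{
    for (size_t frame = 0; frame < MINOR_FRAMES; frame++) {
        run_minor_frame(frame, bb);
        /* Real firmware would block here until the next timer tick. */
    }
}
```

Calling `run_major_cycle(&blackboard)` in an endless loop gives a fully static schedule: each task runs at a fixed place in the cycle, with no preemption, so access to the blackboard needs no locking. The price is that every task must finish well within its frame.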

Base station software: As of now, the base station application can render the drone’s current position and display essential information about the drone in real time. It can also render a preset route atop the map. The ability to edit the route from the map itself is still missing.

What’s next

There is still a ton of work ahead of us! We haven’t yet received the sensors we ordered; when they arrive, they will need to be integrated and tested. We are finishing work on a test frame so that we can implement the navigation next, based on sensor fusion algorithms and a control state machine. Exciting stuff!